X4500 and ZFS Configuration

I was recently asked to help install and configure a Sun X4500 ("Thumper"). The system has dual Opterons, 16GB RAM, and 48 500GB SATA disks. The largest pool I'd configured before this project was a Sun J4200: 24 disks.

UPDATED: 2010-01-31 2245

RAS guru Richard Elling notes a couple of bad assumptions I've made in this post:

- That disks are faster than the controller. They are not, so you gain nothing by spreading across controllers.

- That controller failure is a worry. It is not; see "ZFS RAID recommendations: space vs U_MTBSI".

Much thanks to Richard for correcting me!

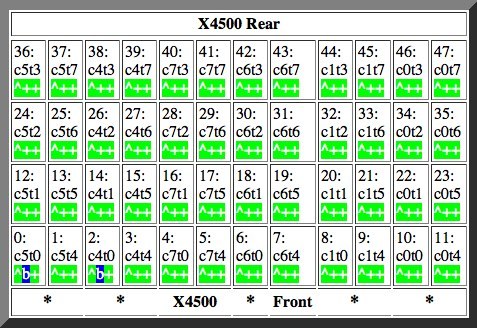

The Thumper controller/disk setup looks like this:

That's six controllers, with 46 disks available for data.

The mirrored ZFS rpool is on c5t0 and c4t0. Placing the mirror halves across controllers allows the operating system to survive a controller failure.

ZFS supports two basic redundancy types: Mirroring (RAID1) and RAIDZ (akin to RAID5, but More Gooder). RAIDZ1 is single parity, and RAIDZ2 double. I decided to go with RAIDZ2 as the added redundancy is worth more than capacity: The 500GB disks can trivially be swapped out for 1TB or 2TB disks, but the pool cannot be easily reconfigured after creation.

From the ZFS Best Practices and ZFS Configuration guides, the suggested RAIDZ2 pool configurations are:

- 4x(9+2), 2 hot spares, 18.0 TB

- 5x(7+2), 1 hot spare, 17.5 TB

- 6x(5+2), 4 hot spares, 15.0 TB

- 7x(4+2), 4 hot spares, 12.5 TB
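The raw capacity and leftover-disk figures can be sanity-checked with a little shell arithmetic. This is only a sketch: the function names are made up for illustration, and it assumes 500GB disks, 46 data slots, and ignores metadata overhead.

```shell
#!/bin/sh
# Raw data capacity of a RAIDZ2 layout, in GB (parity disks excluded).
# usage: raidz2_capacity_gb VDEVS DATA_DISKS_PER_VDEV DISK_GB
raidz2_capacity_gb() {
    echo $(( $1 * $2 * $3 ))
}

# Disks left over (for spares etc.) once the vdevs are built.
# usage: spare_disks TOTAL_DISKS VDEVS DATA_DISKS PARITY_DISKS
spare_disks() {
    echo $(( $1 - $2 * ($3 + $4) ))
}

# Each entry is "vdevs data-disks-per-vdev"; parity is always 2 for RAIDZ2.
for layout in "4 9" "5 7" "6 5" "7 4"; do
    set -- $layout
    printf '%sx(%s+2): %s GB raw, %s disks left over\n' \
        "$1" "$2" \
        "$(raidz2_capacity_gb "$1" "$2" 500)" \
        "$(spare_disks 46 "$1" "$2" 2)"
done
```

The first three layouts come out at 18000, 17500 and 15000 GB, matching the list. For 7x(4+2) the same arithmetic gives 14000 GB, so the 12.5 TB figure listed above does not come from this raw calculation.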

ZFS pools consist of virtual devices (vdevs), which can then be configured in various ways. In the first configuration you are making 4 RAIDZ2 vdevs of 11 disks each, leaving 2 spares.

(ZFS pools are quite flexible: You could set up mirrors of RAIDZs, three-way mirrors, etc. In addition to single and dual parity RAIDZ, RAIDZ3 was recently released: Triple parity!)

Distributing load across the controllers is an important performance consideration but limits possible pool configurations. Preferably you want each vdev to have the same number of members. Surviving a single controller failure is also required.

RAIDZ2 is double parity, so you lose the "+2" disks noted above, but each vdev can sustain two disk losses. The entire pool can therefore survive the loss of up to vdevs*2 disks, provided no single vdev loses more than two. This is pretty important given the size of the suggested vdevs (6-11 disks). The ZFS man page recommends that RAIDZ vdevs not be more than 9 disks because you start losing reliability: More disks and not enough parity to go around. Consider the likelihood of losing more than two disks in a vdev with 30 members, for instance.

The goal is to balance number of vdev members with parity.

A vdev can be grown by replacing every member disk with a larger one. Once all disks have been replaced, the vdev will grow, and the pool's total capacity is increased. If the goal is to incrementally increase space by replacing individual vdevs, it can be something of a hassle if you have too many (say, 11) disks to replace before you get any benefit.

The process for growing a vdev is: Replace a disk, wait for new disk to resilver, replace a disk, wait for new to resilver, replace a disk... it is somewhat time consuming; not something you want to do very often.
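The replace-and-wait cycle could be scripted roughly as follows. This is a sketch under a few assumptions: the new disk goes into the same slot as the old one (so `zpool replace` gets a single device name), the pool is called tank, and `zpool status` reports "resilver in progress" while resilvering. By default it only prints the commands; the `RUN` variable and the function name are my own invention.

```shell
#!/bin/sh
# RUN=echo (the default) only prints commands; set RUN= (empty) to execute.
RUN=${RUN-echo}
POOL=tank

# usage: replace_vdev_disks DISK...
replace_vdev_disks() {
    for disk in "$@"; do
        # The physical swap of the disk in this slot happens before this step.
        $RUN zpool replace "$POOL" "$disk"

        # When really running, poll until the resilver has finished
        # before touching the next disk in the vdev.
        if [ -z "$RUN" ]; then
            while zpool status "$POOL" | grep "resilver in progress" >/dev/null; do
                sleep 60
            done
        fi
    done
}

# The seven members of the first raidz2 vdev in this post's layout.
replace_vdev_disks c0t7d0 c1t7d0 c6t7d0 c7t7d0 c4t7d0 c5t7d0 c0t4d0
```

Replacing one disk at a time keeps the vdev at full double parity minus the disk being resilvered, which is why the loop never moves on early.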

So the tradeoff here is really:

- More initial space (18TB) but less trivial upgrade (11 disks), and ok performance

- Less initial space (15TB) but more trivial upgrade (7 disks), good performance

- Less initial space (12.5TB) but more trivial upgrade (6 disks), best performance

With the 6x(7) or 7x(6) configurations we have four free disks. One or two can be assigned to the pool as a hot spare. The other two or three disks can be used for:

- A mirror for tasks requiring dedicated I/O

- Replacement with 3.5" SAS disks or SSDs, to serve as cache devices

I'll discuss Hybrid Storage Pools (ZFS pools with cache devices consisting of SSD or SAS drives) in another post. They greatly affect pool behavior and performance. Major game-changers.

Unsurprisingly, the 12.5TB configuration has the highest RAS and the best performance. It loads disks across controllers evenly, has the best write throughput, is easiest to upgrade, etc.

Sacrificing 6TB of capacity for better redundancy and performance may not be in line with your vision of the system's purpose.

The 15TB configuration seems like a good compromise. High RAS: Tolerance to failure, good performance, good flexibility, not an incredibly painful upgrade path, and 15TB isn't anything to sneer at.

(Note: After parity and metadata, 13.3TB is actually useable.)

The full system configuration looks like this:

      pool: rpool
     state: ONLINE
     scrub: none requested
    config:

            NAME          STATE     READ WRITE CKSUM
            rpool         ONLINE       0     0     0
              mirror      ONLINE       0     0     0
                c5t0d0s0  ONLINE       0     0     0
                c4t0d0s0  ONLINE       0     0     0

    errors: No known data errors

      pool: tank
     state: ONLINE
     scrub: none requested
    config:

            NAME        STATE     READ WRITE CKSUM
            tank        ONLINE       0     0     0
              raidz2    ONLINE       0     0     0
                c0t7d0  ONLINE       0     0     0
                c1t7d0  ONLINE       0     0     0
                c6t7d0  ONLINE       0     0     0
                c7t7d0  ONLINE       0     0     0
                c4t7d0  ONLINE       0     0     0
                c5t7d0  ONLINE       0     0     0
                c0t4d0  ONLINE       0     0     0
              raidz2    ONLINE       0     0     0
                c0t3d0  ONLINE       0     0     0
                c1t3d0  ONLINE       0     0     0
                c6t3d0  ONLINE       0     0     0
                c7t3d0  ONLINE       0     0     0
                c4t3d0  ONLINE       0     0     0
                c5t3d0  ONLINE       0     0     0
                c1t4d0  ONLINE       0     0     0
              raidz2    ONLINE       0     0     0
                c0t6d0  ONLINE       0     0     0
                c1t6d0  ONLINE       0     0     0
                c6t6d0  ONLINE       0     0     0
                c7t6d0  ONLINE       0     0     0
                c4t6d0  ONLINE       0     0     0
                c5t6d0  ONLINE       0     0     0
                c6t4d0  ONLINE       0     0     0
              raidz2    ONLINE       0     0     0
                c0t2d0  ONLINE       0     0     0
                c1t2d0  ONLINE       0     0     0
                c6t2d0  ONLINE       0     0     0
                c7t2d0  ONLINE       0     0     0
                c4t2d0  ONLINE       0     0     0
                c5t2d0  ONLINE       0     0     0
                c7t4d0  ONLINE       0     0     0
              raidz2    ONLINE       0     0     0
                c0t5d0  ONLINE       0     0     0
                c1t5d0  ONLINE       0     0     0
                c6t5d0  ONLINE       0     0     0
                c7t5d0  ONLINE       0     0     0
                c4t5d0  ONLINE       0     0     0
                c5t5d0  ONLINE       0     0     0
                c4t4d0  ONLINE       0     0     0
              raidz2    ONLINE       0     0     0
                c0t1d0  ONLINE       0     0     0
                c1t1d0  ONLINE       0     0     0
                c6t1d0  ONLINE       0     0     0
                c7t1d0  ONLINE       0     0     0
                c4t1d0  ONLINE       0     0     0
                c5t1d0  ONLINE       0     0     0
                c5t4d0  ONLINE       0     0     0
            spares
              c0t0d0    AVAIL
              c1t0d0    AVAIL

    errors: No known data errors
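Since each raidz2 vdev tolerates two missing disks, the layout above survives a controller failure only if no controller contributes more than two disks to any one vdev. A quick way to check that from the device names might look like this (a sketch; the function name is hypothetical, and it assumes the usual cXtYdZ naming where the controller is everything before the "t"):

```shell
#!/bin/sh
# Print the largest number of disks any single controller contributes
# to the given list of vdev members.
# usage: max_per_controller c0t7d0 c1t7d0 ...
max_per_controller() {
    printf '%s\n' "$@" |
        sed 's/t.*//' |      # c0t7d0 -> c0 (keep only the controller)
        sort | uniq -c |     # count disks per controller
        awk '$1 > max { max = $1 } END { print max + 0 }'
}

# First raidz2 vdev of the tank pool.
max_per_controller c0t7d0 c1t7d0 c6t7d0 c7t7d0 c4t7d0 c5t7d0 c0t4d0
```

For the first vdev this prints 2: controller c0 holds both c0t7d0 and c0t4d0, and every other controller holds one member.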

The command to create the tank pool:

    zpool create tank \
        raidz2 c0t7d0 c1t7d0 c6t7d0 c7t7d0 c4t7d0 c5t7d0 c0t4d0 \
        raidz2 c0t3d0 c1t3d0 c6t3d0 c7t3d0 c4t3d0 c5t3d0 c1t4d0 \
        raidz2 c0t6d0 c1t6d0 c6t6d0 c7t6d0 c4t6d0 c5t6d0 c6t4d0 \
        raidz2 c0t2d0 c1t2d0 c6t2d0 c7t2d0 c4t2d0 c5t2d0 c7t4d0 \
        raidz2 c0t5d0 c1t5d0 c6t5d0 c7t5d0 c4t5d0 c5t5d0 c4t4d0 \
        raidz2 c0t1d0 c1t1d0 c6t1d0 c7t1d0 c4t1d0 c5t1d0 c5t4d0 \
        spare c0t0d0 c1t0d0

This leaves c6t0d0 and c7t0d0 available for use as more spares, for another pool or as cache devices.
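For reference, the three options for those two disks would look something like this. It is a sketch rather than what was run on this machine: the commands are only printed unless `RUN` is set to empty, the function names are made up, and "scratch" is a hypothetical name for a second pool.

```shell
#!/bin/sh
# RUN=echo (the default) prints the commands instead of running them.
RUN=${RUN-echo}

# Option 1: add both disks to tank as (L2ARC) cache devices.
add_cache() { $RUN zpool add tank cache c6t0d0 c7t0d0; }

# Option 2: add both disks to tank as additional hot spares.
add_spares() { $RUN zpool add tank spare c6t0d0 c7t0d0; }

# Option 3: build a separate mirrored pool ("scratch" is a made-up name).
make_mirror() { $RUN zpool create scratch mirror c6t0d0 c7t0d0; }

add_cache
```

Cache devices and spares sit outside the raidz2 vdevs, so adding either does not change the pool's redundancy.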

I feel the configuration makes for a good compromise. If it doesn't prove successful or we've misjudged the workload for the machine, we have the ability to add cache devices without compromising the pool's redundancy.

That said, I'll be quite interested in seeing how it performs!